The Lure

Business Intelligence (BI) practitioners continue to hear about the tremendous return and impact of data mining and predictive analytics applications through a steady stream of case studies across industries. It’s no wonder so many organizations are striving to make their way down the BI development chain to a practice that offers self-validating, forward-looking insight. These organizations are anxious to uncover and leverage the valuable intelligence hidden within their existing operational data.

The Catch

As with any endeavor that offers rich rewards, numerous risks, barriers and pitfalls stand in the way. In predictive analytics, however, those barriers are not where a seasoned BI practitioner would expect them. Time and again, newcomers to data mining fall prey to rookie mistakes.

But don’t take our word for it. We will reference two recent industry surveys which reveal that the majority of data mining practitioners focus on the wrong end of the problem. Not surprisingly, a similar proportion fails to achieve positive results, or cannot even tell whether it has.

With a practice as complex as predictive analytics, that stands to reason, right? Predictive analytics isn’t exactly like balancing a checkbook, is it? So, what do you think is the greatest challenge to succeeding in predictive analytics?

What Most Suspect Is The Biggest Barrier Is Not Even In The Top 90

If you guessed “technical complexity,” you’re certainly not alone; it’s the most common response. But you would be far from correct. Sure, many of the technologies and much of the mathematics involved are intimidating to new practitioners. But many predictive analytics software packages now include capable wizards that manage neural networks, decision trees, logistic regression, genetic algorithms and other methods. Because they don’t realize that modern software is well equipped to help general BI practitioners build very good predictive models, many hesitate or postpone entering the practice.

In reality, it’s not the paralysis of overestimating the tactical implementation but the rush of underestimating the strategic approach that kills most data mining implementations before they begin. And it’s an expensive oversight, because it often surfaces only after the process is complete, leaving practitioners wondering why their models cannot be interpreted. Our mini-countdown will reveal the most critical, yet elusive, strategic pitfalls.

Don’t Bring Your Data Warehouse Project Framework To This Game

The strategic approach and project design for predictive analytics differ substantially from those in other areas of BI. Whereas data warehouse design resembles an engineering project, predictive analytics and data mining are discovery processes. And while several consortiums have standardized formal processes to accommodate discovery and iteration, the practice remains riddled with common pitfalls.

Those who make the effort to learn the industry-standard approach to predictive analytics are nearly assured of reaping lasting returns, long before their counterparts who rush to acquire a tool and dive headlong into the data.

As we turn our attention to the most frequent and impactful barriers to successful predictive analytics projects, you’ll recognize a shared theme: they are all strategic. That doesn’t mean tactics and methods don’t matter; in many cases, incremental improvements in model performance realize substantial returns. But being a slightly faster sprinter does you no good if you’re running in the wrong direction.

The Countdown

So, let’s touch on just five of the most critical and common ways to sabotage an initial data mining implementation:

#5: Approach Predictive Analytics Like an Engineering Project

The effort and progress involved in a Predictive Analytics implementation are by no means linear. Predictive Analytics is the goal-driven analysis of large data sets. The significance of large data sets here is not primarily technical. Rather, it reflects the recognition that no individual or small working group can know all of the relationships that an organization has with individuals and other organizations. Accepting that, we adopt an approach based on group behaviors: our best efforts are directed toward identifying groups that display behaviors of interest to us.

Not all individuals in a given group will display the behavior we are interested in at the same time, and specific individuals will display the behavior inconsistently. It follows that the best we can achieve is an expected probability that an individual will display a behavior of interest at a rate consistent with the group to which they belong.
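To make the group-rate idea concrete, here is a minimal Python sketch, assuming historical records that pair a hypothetical group label with whether that individual displayed the behavior of interest. It estimates each group's observed rate, which is the expected probability we would assign to any member of that group. The function name, labels and data are illustrative assumptions, not part of any particular tool.

```python
from collections import defaultdict


def group_response_rates(records):
    """Estimate, for each group, the share of members who displayed the behavior.

    `records` is a list of (group_label, displayed_behavior) pairs, where
    displayed_behavior is True or False. Labels and data are hypothetical.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for group, displayed in records:
        counts[group][0] += int(displayed)
        counts[group][1] += 1
    return {group: pos / total for group, (pos, total) in counts.items()}


# Hypothetical history: members of group "A" display the behavior more often than "B".
history = [("A", True), ("A", False), ("A", True), ("A", True),
           ("B", False), ("B", False), ("B", True), ("B", False)]
rates = group_response_rates(history)
print(rates)  # {'A': 0.75, 'B': 0.25}
```

Under these assumptions, a new individual assigned to group A would receive an expected probability of 0.75, not a guarantee that they will respond. In practice, group membership would come from a model rather than a hand-assigned label.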

From there, we turn to goal-driven analysis. We must define a specific set of performance parameters. Those performance parameters are our goal, and our objective is to improve our performance against them.

As simple as that sounds, this is where most organizations run into problems with their projects. They often either fail to establish specific performance parameters or rely on analytic metrics that have little or nothing to do with the organization’s true business metrics.

Our Predictive Analytics project is the process of developing mathematical models that identify groups of individuals who display the behavior of interest at differing rates. Identifying these groups allows us to allocate our resources more selectively. In short, we are looking for a better way to break our relationships into groups so that we can allocate more resources to the groups that benefit us and minimize resources to the groups that have a negative impact on our specific performance metrics.

The mathematical models we develop eventually become the decision strategies we employ to determine who is, and who isn’t, a member of a group that displays a behavior of interest. We typically develop a large number of candidate models, or candidate decision strategies, evaluate the performance of each based on our specific performance metrics, and select the one that gives us the highest level of performance.
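The candidate-selection loop described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: each candidate decision strategy is a hypothetical rule that decides whether to contact a record, and the business metric is a hypothetical net profit (value per responder minus cost per contact) measured on holdout data. None of the names, rules or figures come from a specific product.

```python
from functools import partial


def net_profit(decisions, outcomes, value_per_responder, cost_per_contact):
    """Hypothetical business metric: value of contacted responders minus contact cost."""
    contacted = [outcome for decide, outcome in zip(decisions, outcomes) if decide]
    return value_per_responder * sum(contacted) - cost_per_contact * len(contacted)


def select_strategy(candidates, holdout_records, holdout_outcomes, metric):
    """Apply each candidate decision strategy to holdout data and keep the best by `metric`."""
    scores = {}
    for name, strategy in candidates.items():
        decisions = [strategy(record) for record in holdout_records]
        scores[name] = metric(decisions, holdout_outcomes)
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical holdout records: (months_since_last_purchase, past_purchases)
holdout_records = [(3, 4), (12, 3), (2, 0), (8, 1), (5, 2), (20, 0)]
holdout_outcomes = [1, 1, 0, 0, 1, 0]  # 1 = responded to the offer

# Hypothetical candidate decision strategies: True means "contact this record"
candidates = {
    "contact_all": lambda r: True,
    "frequent_buyers": lambda r: r[1] >= 2,
    "recent_and_frequent": lambda r: r[0] <= 6 and r[1] >= 2,
}

metric = partial(net_profit, value_per_responder=50, cost_per_contact=10)
best, scores = select_strategy(candidates, holdout_records, holdout_outcomes, metric)
print(best, scores)
# frequent_buyers {'contact_all': 90, 'frequent_buyers': 120, 'recent_and_frequent': 80}
```

The winning strategy here is not the most selective one; swapping in a different business metric could change which candidate wins, which is why the metric has to be defined before the candidates are compared.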

The process of defining our business decision processes, identifying the data that helps us segregate groups into subgroups, building and testing models, and evaluating each candidate model’s performance against our performance metrics is highly iterative. At each step, we are likely to gain new insights or run into dead ends that force us to back up, re-evaluate earlier decisions and move forward again in a perpetual search for higher levels of performance.

#4: Gloss Over a Comprehensive Project Assessment

If you don’t know where you are, what you have and where you want to go, how will you get wherever ‘there’ is? Yet so many organizations pick a software solution from the vendor who made the most polished pitch, feed it the most readily available raw data and expect a HAL 9000 (from the Space Odyssey series) to recite the predictions that will streamline operations, cut losses and accelerate profits.

This advice sounds obvious, if not trite. Yet few follow the initial business understanding and data understanding steps outlined in public-domain, industry-standard process guides such as the Cross-Industry Standard Process for Data Mining (CRISP-DM).

Conducting a formal data mining project assessment has numerous benefits. Not only does the assessment align resources and environmental considerations with objectives, it also aligns teams and prioritizes the resulting set of qualifying projects. Or, it measures the distance to the starting line and the actions needed to reach it, averting a premature start on a potentially expensive project.

Most importantly, the assessment produces a project definition: a blueprint adapted to the discovery process that establishes a formal yet flexible framework and clarifies expectations for both the specific next stages and the overarching project phases.

Gloss over this exercise, and you’ll find yourself staring at model output that looks somewhat different from the data that went into the model, but is no more meaningful.

#3: Focus on Methods, Tactics and Optimal Model Performance

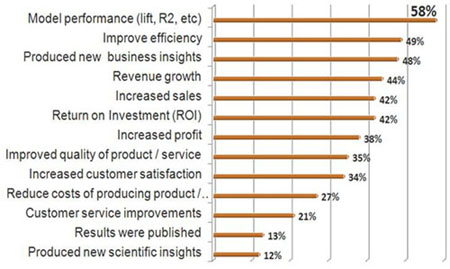

In their Third Annual Data Miner Survey, Rexer Analytics, an analytic and CRM consulting firm based in Winchester, Massachusetts, asked the BI community, “How do you evaluate project success in data mining?” Out of 14 different criteria, a whopping 58% ranked “Model Performance (i.e. Lift, R2, etc.)” as the primary factor. In effect, these respondents placed more value on precise forecasts than on whether those forecasts addressed the business question at hand.

Source: Rexer Analytics, 3rd Annual Data Miner Survey, by Karl Rexer, PhD, Heather N. Allen, PhD, and Paul Gearan

It is no mystery, then, that a survey conducted by KDnuggets.com, a highly popular data mining resource site, found that over 51% of respondents either did not achieve positive ROI or could not calculate it.

No one in a business environment has ever gotten a raise, bonus or promotion based on R², or on any other analytic metric. One of the greatest threats to successful model development is building very good models that answer the wrong question. Experienced data mining practitioners readily recognize that a decision strategy that maximizes response rate may not be the same one that maximizes net profit.

However, far too many practitioners fail to recognize that the same logic applies to the relationship between analytic metrics and business performance metrics. There is simply no reason to expect that a model developed to maximize R² or lift will also maximize the business performance metrics that matter to our organization.
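The gap between analytic and business metrics is easy to demonstrate. The Python sketch below assumes hypothetical outcomes already sorted by a model's score and hypothetical economics (a value of 40 per responder and a cost of 10 per contact). For each targeting depth it computes response rate, lift and net profit. Because lift at a given depth is the response rate divided by the overall rate, the depth that maximizes lift is also the one that maximizes response rate, yet neither matches the depth that maximizes profit.

```python
def evaluate_cutoffs(ranked_outcomes, value_per_responder, cost_per_contact):
    """For each depth k, contact the top-k scored records and report analytic and business metrics.

    ranked_outcomes: 1/0 outcomes already sorted from highest to lowest model score
    (hypothetical data; assumes at least one responder overall).
    """
    overall_rate = sum(ranked_outcomes) / len(ranked_outcomes)
    results = []
    responders = 0
    for k, outcome in enumerate(ranked_outcomes, start=1):
        responders += outcome
        rate = responders / k
        results.append({
            "depth": k,
            "response_rate": rate,                      # analytic metric
            "lift": rate / overall_rate,                # analytic metric
            "net_profit": value_per_responder * responders - cost_per_contact * k,  # business metric
        })
    return results


ranked = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]  # hypothetical outcomes, best scores first
results = evaluate_cutoffs(ranked, value_per_responder=40, cost_per_contact=10)

best_lift = max(results, key=lambda r: r["lift"])          # first depth with the highest lift
best_profit = max(results, key=lambda r: r["net_profit"])  # depth with the highest profit
print(best_lift["depth"], best_profit["depth"])  # 1 7
```

With these made-up numbers, contacting only the top one or two records maximizes response rate and lift, while contacting the top seven maximizes net profit.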

#2: Over-reliance on Software Solutions

As powerful as today’s software is, it does nothing that we could not do with pencil, paper, a calculator and enough time. Today’s software makes us more efficient… nothing more. It does not understand our domain; it cannot conceive our business decision process; it will not determine our organization’s specific business performance metrics; it does not understand our organization’s resource allocation issues; and it does not know the data structures of our data warehouse or how we will implement our solution. All of those issues must be addressed by the project development team.

Today’s software will allow you to develop a large number of models using a variety of very sophisticated techniques in a relatively short period of time. It then becomes your job to evaluate those candidate models in the context of your organization’s performance metrics and confirm the appropriateness of adopting the model as your new decision process for this specific resource allocation decision.

#1: Lack of a Solid Project Definition

As the surveys mentioned above illustrate all too well, the single biggest reason Predictive Analytics projects fail is that we don’t take the time to develop solid definitions and designs for our projects.

We would never consider constructing a building without a clear, well-defined set of blueprints. We would go to great lengths to make sure that we know what we are building, and why. We would ensure that we know what materials are required and whether they are available. And we would verify whether the finished building met our criteria, and what benefits it delivered.

Why, then, do so many organizations start Predictive Analytics projects with ill-defined project plans, no clear development strategy, minimal analysis of the available data and its suitability for the intended modeling effort, and no effective way to measure improvement in business performance metrics or return on investment?

How To Skip The Other 96

The most important first step toward navigating the implementation hurdles associated with predictive analytics is to educate yourself. Even if you outsource most of the implementation, you will be better positioned to interact confidently with your team, understand strategic priorities and appreciate the dynamics of the discovery process. If time is not pressing, there is a wealth of books on the topic, provided you have the discipline to truly absorb them.

Beware of online education for predictive analytics. When it comes to data mining, online training is effective at best for a surface-level orientation or a sample of the material. Do not rely on online training if you are seeking true functional knowledge and reinforced skills.

The fastest way to establish a real-world understanding of data mining is to participate in instructor-led classroom training. Dedicated, distraction-free time, direct access to the instructor and interaction with fellow practitioners provide a deeper understanding of strategies and tactics, practical benefits and lasting effects.

Several software vendors offer great instructor-led courses. In most cases, however, these courses restrict their view of predictive analytics to the methods represented in the vendor’s tool.

Only a few providers offer general, vendor-neutral data mining and predictive analytics courses. Resource sites such as KDnuggets, the B-Eye-Network and AnalyticBridge can help you find a public offering and evaluate providers. You will then be far better prepared to achieve measurable success on your initial predictive analytics implementation, placing you in the prized minority.

About The Authors

ERIC A. KING

After graduating from the University of Pittsburgh with a bachelor’s degree in computer science in 1990, Eric joined NeuralWare, Incorporated, a neural network tools company, as a senior account executive. In 1994, he moved to American Heuristics Corporation (AHC), an advanced software technology consulting company in West Virginia specializing in artificial intelligence applications.

At AHC, he served as director of business development for the commercial services division and started a training operation, The Gordian Institute. With the valued support of AHC, contractors, customers and family, Eric founded TMA in January 2000 in the spirit of establishing long-term professional relationships.

TMA provides guidance and results for those who are data-rich, yet information-poor. TMA produces a popular live webinar, “Predictive Analytics: Failure to Launch.”

THOMAS A. “TONY” RATHBURN

Mr. Rathburn has an exceptionally strong track record of innovation and creativity in applying Predictive Analytics in business environments. Tony has assisted commercial and government clients internationally in developing and implementing Predictive Analytics solutions since the mid-1980s.

As a Senior Consultant at the predictive analytics training and consulting firm TMA, Mr. Rathburn delivers custom workshops and consults on a wide range of commercial assignments. His two-plus decades of real-world experience make him a sought-after resource. He is a regular presenter of the Predictive Analytics Track at the TDWI World Conferences and is engaged by a number of software vendors to present the practical implementation aspects of their tools.

Mr. Rathburn’s expertise focuses on the design and development of business models of human behavior. From an industry perspective, he has extensive experience in the financial sector, banking, insurance, energy, retail and telecommunications. He also brings a broad functional-area perspective, including the development of models for trading, pricing, CRM, B2B, B2C, fraud detection, risk management and attrition/retention.

Mr. Rathburn’s academic training includes seven years teaching MIS and Statistics at both the graduate and undergraduate level while an instructor in the College of Business at Kent State University. Prior to joining TMA in 2000, Tony served as Vice President of Applied Technologies for NeuralWare, Inc., a neural network tools and consulting company. He was also the Research Coordinator for LakeShore Trading, Inc., a successful futures and options trading firm on the Chicago Board of Trade. Tony is a co-presenter in a highly popular introductory predictive analytics webinar entitled “Predictive Analytics: Failure to Launch” and may be contacted by e-mail at tony@tma42.com.